GPT-5: Is It Really a Revolution or Just a Marketing Trick?

OpenAI's New Model Failed to Meet High Expectations

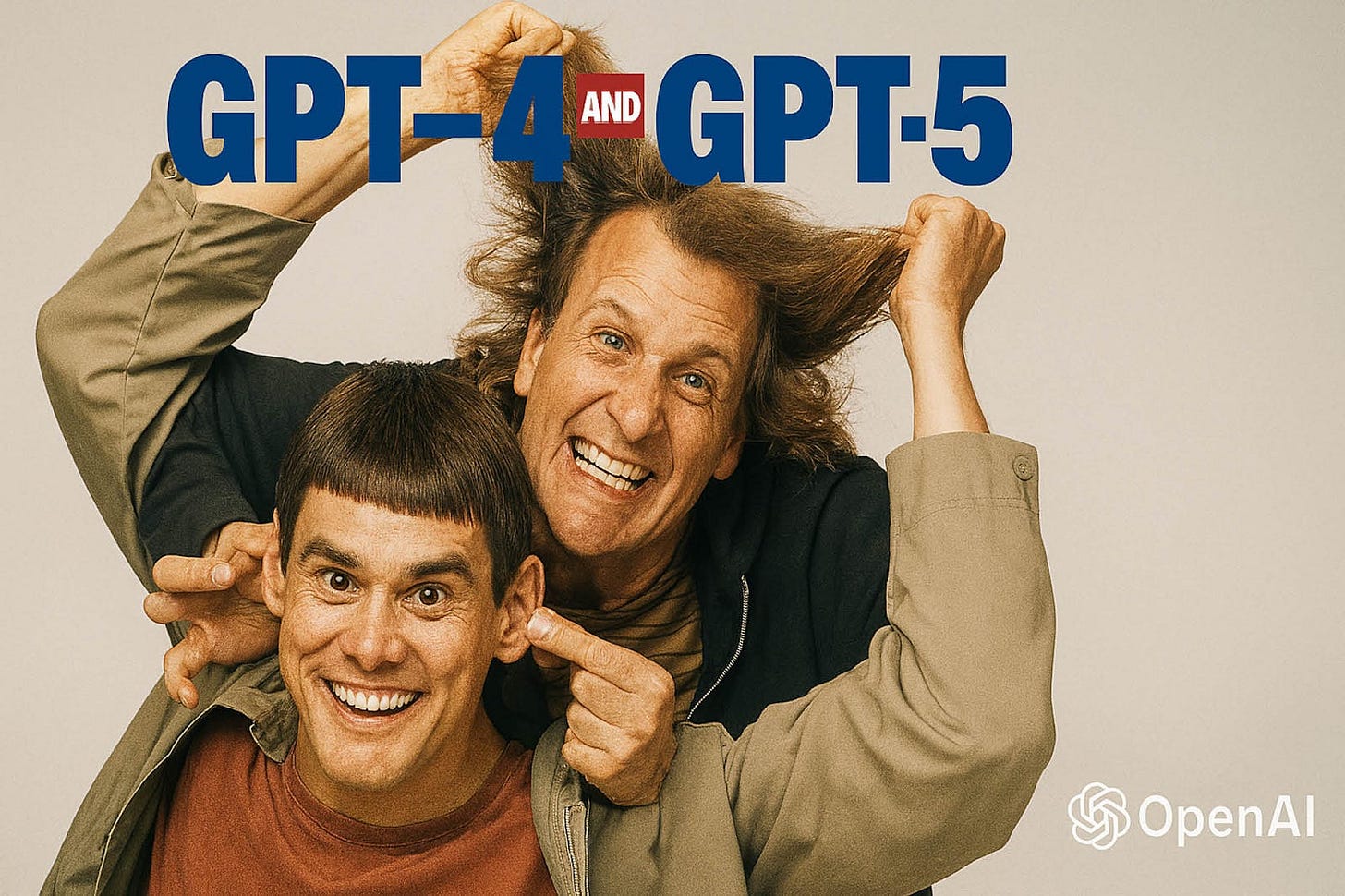

Last week, when I wrote about how Anthropic was doing much better than OpenAI in business use, it was already clear that OpenAI would soon introduce its new model, GPT-5. Sam Altman was making very bold statements about it, so as I wrote, I wondered whether the race might heat up again if those promises turned out to be real. But after the launch, having read user comments and run some tests myself, I couldn't help but say "the mountain gave birth to a mouse." So let me state my conclusion up front: GPT-5 felt like buying a new iPhone after a few years. On one hand, they say it has improved a lot in many ways. On the other hand, it's still just an iPhone.

What Was Promised?

Before GPT-5, perhaps to excite the geeks, OpenAI released two open-weight models, gpt-oss-120b and gpt-oss-20b, which you can run on your own hardware. Credit where it's due: this was a nice move. gpt-oss-20b in particular can run on an ordinary laptop with enough memory and doesn't need a dedicated graphics card, while the larger model naturally needs a powerful Nvidia card. Still, these are good developments.

OpenAI CEO Sam Altman introduced GPT-5 as "the world's best model," promising major improvements over earlier models. At the launch, Michelle Pokrass from OpenAI also stated plainly that GPT-5 was better than GPT-4. According to Eric Mitchell, also from OpenAI, GPT-5 was ahead of GPT-4 in several key areas, such as reasoning and creative writing.

The launch also put heavy emphasis on coding, claiming that GPT-5 had left every competitor behind and showing a range of benchmark results to back this up.

Dreams and Reality

After the launch, GPT-5 was made available to all users, including those on the free tier, so everyone could try it out. They deserve thanks here too: everyone could use and judge it for themselves, instead of relying on reviews from $200-a-month subscribers who may be reluctant to say "the emperor has no clothes" for fear of looking foolish.

The general opinion is that it's not as good as OpenAI claimed. It would not be unfair to say it even trails Anthropic's Claude Sonnet 4. Beyond performance, one of the biggest complaints after the launch was the removal of previous models such as GPT-4o, 4.1, 4.5, and o3. It's not clear why they did this.

The Reddit AMA (Ask Me Anything), in which Sam Altman also took part, was flooded with user complaints. Longtime users said that the workflows and particular dynamics they had built around GPT-4o broke with the new model; some even felt that "years of work and dedication had gone to waste," which is a serious problem.

Data scientist Colin Fraser even shared screenshots showing GPT-5 getting basic math and algebra problems wrong (!). Developers also vented their frustration at Sam Altman, saying that GPT-5 performed worse than Anthropic's Claude Opus 4.1 on some one-shot programming tasks. All in all, the AMA turned into a nightmare for Altman.

The explanation offered was that some of the problems stemmed from the new automatic "router" system, which assigns each user request to one of four GPT-5 variants (normal, mini, nano, and pro). In other words, requests from people using GPT-5, including free users, were apparently classified and sent to different models, and a fault in this step may have sent everyone to one of the smallest versions.
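To make the failure mode concrete, here is a toy router sketch. OpenAI has not published how its router works, so everything below is hypothetical: the `Variant` names mirror the four variants mentioned above, while `estimate_difficulty`, its keyword list, and the `classifier_ok` flag are invented for illustration. The point is only that a single fault with a "cheapest model" fallback silently downgrades every request at once.

```python
from enum import Enum


class Variant(Enum):
    NANO = "nano"
    MINI = "mini"
    NORMAL = "normal"
    PRO = "pro"


# Hypothetical ordering of the variants, from smallest to largest.
ORDER = [Variant.NANO, Variant.MINI, Variant.NORMAL, Variant.PRO]


def estimate_difficulty(prompt: str) -> float:
    """Toy heuristic (assumption): longer prompts and reasoning keywords score higher, in 0..1."""
    score = min(len(prompt) / 2000, 0.6)
    if any(word in prompt.lower() for word in ("prove", "debug", "step by step")):
        score += 0.4
    return min(score, 1.0)


def route(prompt: str, classifier_ok: bool = True) -> Variant:
    """Pick a variant by estimated difficulty; if classification fails, fall back to the cheapest."""
    if not classifier_ok:
        # A silent fallback like this sends every request to the smallest model.
        return ORDER[0]
    difficulty = estimate_difficulty(prompt)
    index = min(int(difficulty * len(ORDER)), len(ORDER) - 1)
    return ORDER[index]


if __name__ == "__main__":
    print(route("hi"))                                              # easy prompt
    print(route("Please debug this step by step " + "x" * 1000))   # hard prompt
    print(route("Please debug this step by step", classifier_ok=False))  # broken classifier
```

With the classifier working, a short greeting goes to `nano` and a long debugging request goes to `pro`; with `classifier_ok=False`, even the hard request lands on `nano`, which is the kind of across-the-board downgrade users described.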

My Tests

After reading these comments, I wanted to see for myself. Using Windsurf, I gave Anthropic's Sonnet 4 and GPT-5 the same five-item project, a simple interface plus some simple coding tasks, and ran each separately. My results agreed with the complaints: Sonnet 4 is still the best for coding. GPT-5 with low reasoning effort, which is free to use on Windsurf, performed really poorly. The paid high-reasoning version does better but still falls short of Sonnet 4. Of course, a test this small can't settle whether it is "good" or "bad," but I won't be giving up Sonnet 4 anytime soon.

OpenAI's Strategic Pricing

Note that OpenAI made this model available to everyone on launch day, and even the smaller variants can be used completely free. The GPT-5 API was announced at $1.25 per million input tokens and $10 per million output tokens. This is close to Google's Gemini 2.5 Pro and far cheaper than Anthropic's Claude Opus 4.1, which is priced at $15 per million input tokens and $75 per million output tokens. GPT-5 is even cheaper than GPT-4o. This pricing has the potential to start a price war in the large language model market. Perhaps OpenAI hopes to win back the enterprise market it has lost, but that is hard to do on price alone.
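To put the price gap in concrete terms, the small calculation below compares the two list prices quoted above for a hypothetical monthly workload of 10 million input tokens and 2 million output tokens. The workload figures and the `monthly_cost` helper are assumptions for illustration only, and the sketch ignores any caching, batch, or volume discounts either provider may offer.

```python
# Per-million-token list prices in USD (input, output), as quoted in this article.
PRICES = {
    "GPT-5": (1.25, 10.00),
    "Claude Opus 4.1": (15.00, 75.00),
}


def monthly_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the API cost in USD for the given token counts (hypothetical helper)."""
    input_price, output_price = PRICES[model]
    return (input_tokens / 1_000_000) * input_price + (output_tokens / 1_000_000) * output_price


if __name__ == "__main__":
    # Hypothetical workload: 10M input tokens and 2M output tokens per month.
    workload = (10_000_000, 2_000_000)
    for model in PRICES:
        print(f"{model}: ${monthly_cost(model, *workload):,.2f}")
```

For this workload, GPT-5 comes to $32.50 while Claude Opus 4.1 comes to $300.00, roughly a ninefold difference, which is why the pricing looks like an opening move in a price war.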

I Look at What You Do, Not What You Say

Sam Altman and his team had set expectations very high before the launch. The launch was then overshadowed by the sudden removal of old models and intense user complaints about a perceived drop in performance. The company acknowledged this and promised to bring back at least GPT-4o. That, too, is a positive step. But OpenAI seems to be on its way to becoming the Apple of the artificial intelligence world: the line that kept climbing has flattened out, and, unfortunately, flashy launches can't hide that. At this rate, rather than winning back the enterprise market, OpenAI risks losing what is left of its share.

What do you think? Have you had a chance to test it yourself? I look forward to your comments.

See you in the next article.